Reproduction of Towards Faster and Stabilized GAN Training for High-fidelity Few-shot Image Synthesis

(SLE-GAN)

Usage

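```python
import tensorflow as tf
import sle_gan

# Build the generator for 512x512 outputs and load the weights from file
G = sle_gan.Generator(output_resolution=512)
G.load_weights("generator_weights.h5")

# Sample input noise for a single image and run it through the generator
input_noise = sle_gan.create_input_noise(batch_size=1)
generated_images = G(input_noise)

# Convert the output tensor to a uint8 NumPy array
generated_images = sle_gan.postprocess_images(generated_images, tf.uint8).numpy()
```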
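
To look at the result, the uint8 array has to be written to an image file. The snippet below is a minimal, dependency-free sketch of that step. `encode_png_rgb` is a hypothetical helper, not part of `sle_gan`, and it assumes each image is laid out as (height, width, 3) with values in 0..255. In practice `tf.io.encode_png` or Pillow would be the usual choice.

```python
import struct
import zlib


def _chunk(tag: bytes, data: bytes) -> bytes:
    """Build one PNG chunk: length, tag, data, CRC over tag + data."""
    crc = zlib.crc32(tag + data) & 0xFFFFFFFF
    return struct.pack(">I", len(data)) + tag + data + struct.pack(">I", crc)


def encode_png_rgb(image) -> bytes:
    """Encode an (H, W, 3) array-like of 0..255 ints as an 8-bit RGB PNG.

    Hypothetical helper: works on nested lists or on a NumPy array.
    """
    height = len(image)
    width = len(image[0])
    raw = bytearray()
    for row in image:
        raw.append(0)  # filter type 0 (no filtering) starts every scanline
        for pixel in row:
            raw.extend(int(channel) for channel in pixel)
    # IHDR: width, height, bit depth 8, color type 2 (RGB), then
    # compression, filter and interlace methods all set to 0
    header = struct.pack(">IIBBBBB", width, height, 8, 2, 0, 0, 0)
    return (
        b"\x89PNG\r\n\x1a\n"
        + _chunk(b"IHDR", header)
        + _chunk(b"IDAT", zlib.compress(bytes(raw)))
        + _chunk(b"IEND", b"")
    )


# Usage with the array from the snippet above (assumed shape: batch, H, W, 3):
# for i, image in enumerate(generated_images):
#     with open(f"generated_{i}.png", "wb") as f:
#         f.write(encode_png_rgb(image))
```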

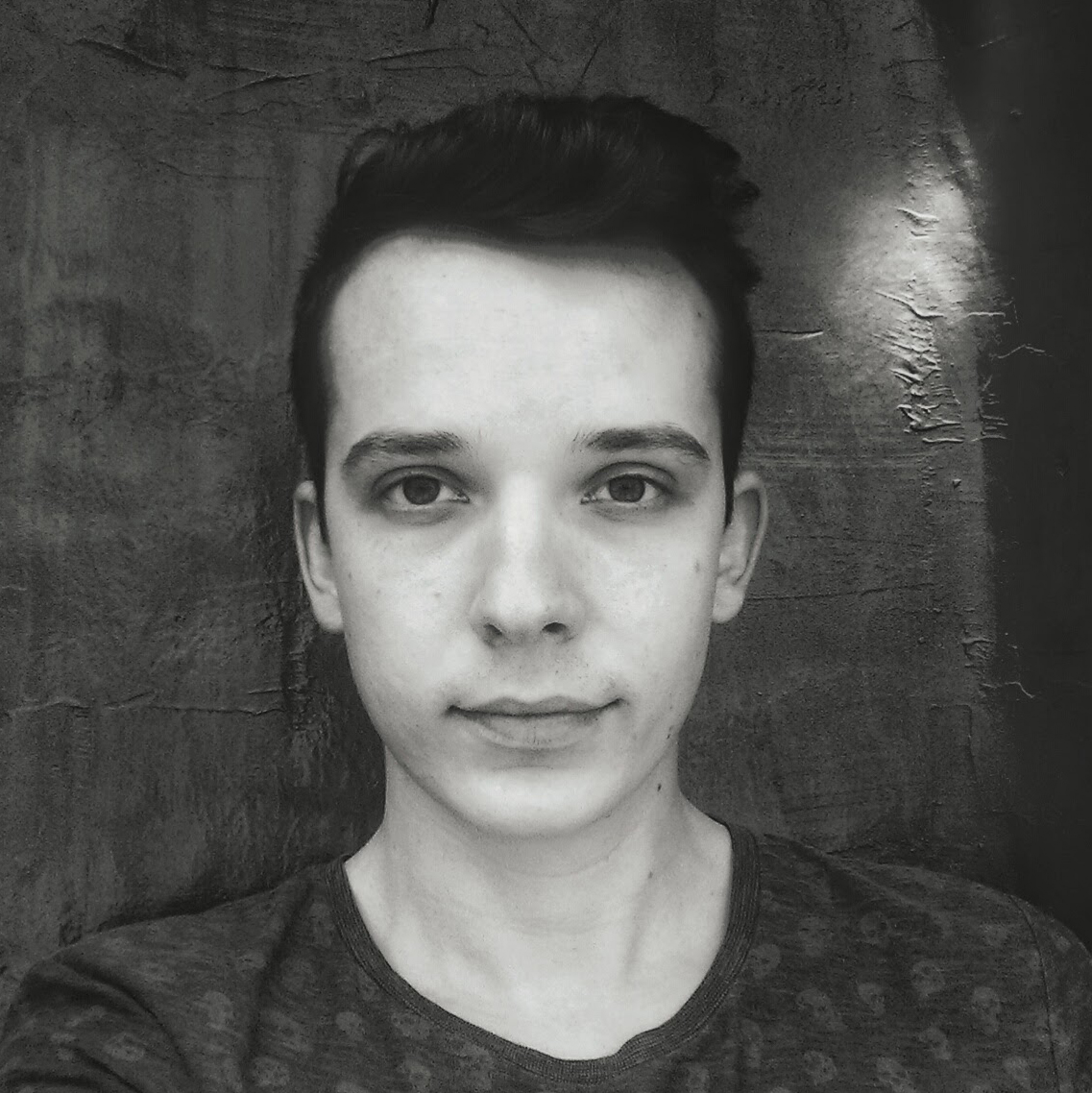

Generated Images

These are not cherry-picked.

Difficulties throughout reproduction

While reading the paper and starting the implementation, I felt that a lot of small but important details were missing. Some of them can be guessed from previous experience, but I would love to see a more detailed description of them for a 100% reproduction.

Some of these:

- A detailed discussion of the architecture: how the smaller variants (resolutions of 256 and 512) are built up, which layers are skipped, which have reduced filter counts, etc.

- The training schedule and some visualization of the loss(es) during training

- FID score throughout the training and comparison with the other discussed SOTA models

- Hyperparameters chosen for the different datasets

- Are any changes needed when training on small datasets (<1k images) versus big datasets?
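
For reference, the FID mentioned in the list above is normally computed as the Fréchet distance between two Gaussians fitted to Inception-v3 features of the real and the generated images. The formula below is the standard definition, and the symbols are my own labels rather than notation taken from the paper:

$$
\mathrm{FID} = \lVert \mu_r - \mu_g \rVert_2^2 + \operatorname{Tr}\left(\Sigma_r + \Sigma_g - 2\,(\Sigma_r \Sigma_g)^{1/2}\right)
$$

Here $\mu_r, \Sigma_r$ and $\mu_g, \Sigma_g$ are the feature mean and covariance of the real and the generated set. The estimate depends on how many images are used to fit the two Gaussians, so that number would need to be reported next to any FID curve for the comparison to be reproducible.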